Published on August 22, 2025 | August 18, 2025

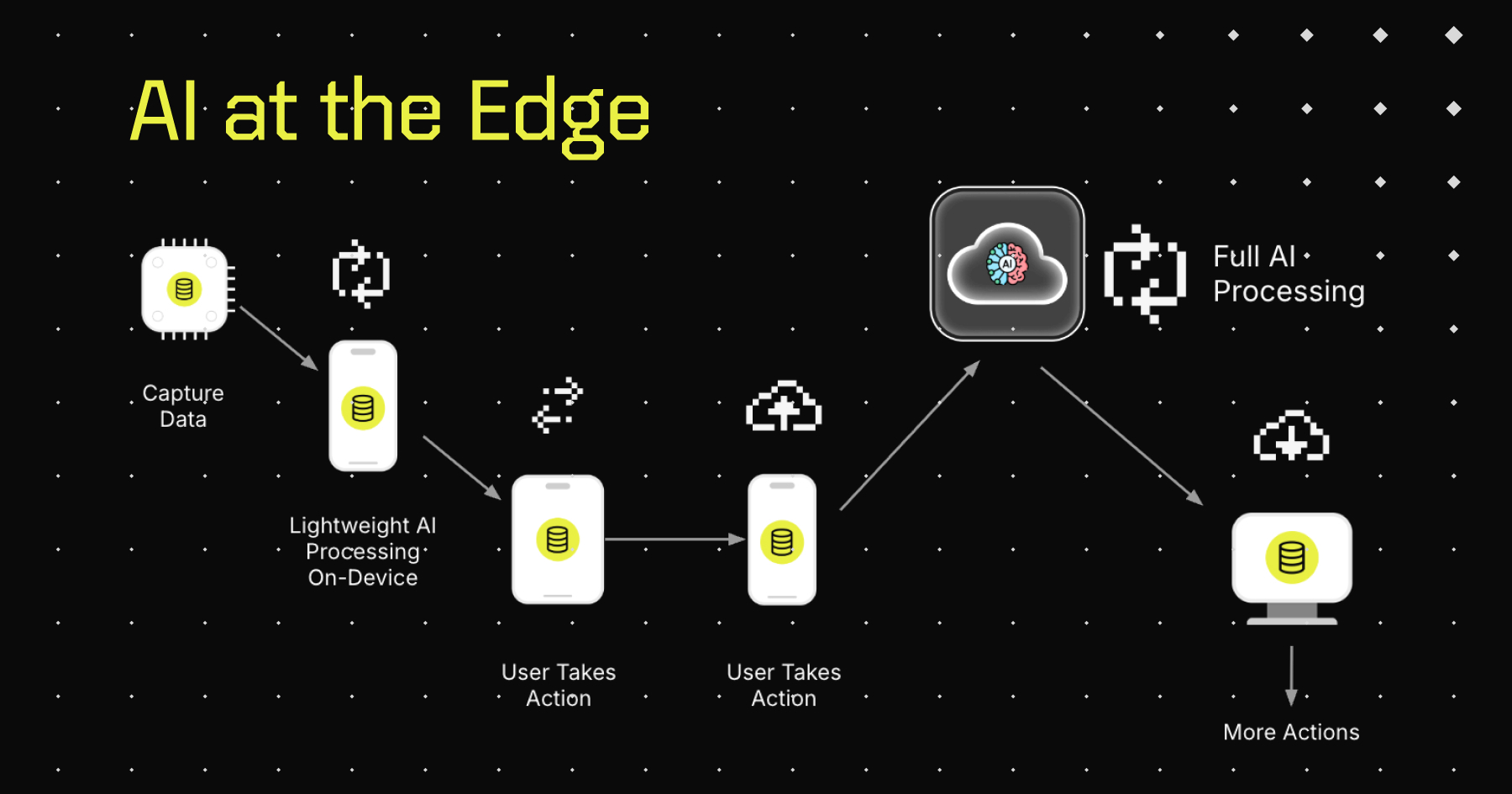

AI at the Edge: Bringing Disruptive Intelligence to Disruptive Environments

Can hybrid edge-AI enable deskless workers to stay productive and collaborative, even without cloud connectivity?

I recently delivered a webinar, AI at the Edge: Bringing Disruptive AI to Disruptive Environments. The session explored how businesses can unlock the power of AI for deskless workers operating in environments where connectivity isn’t guaranteed.

Why Edge AI Matters

While AI is transforming industries, many organizations overlook a critical segment of the workforce: deskless workers. These employees, across construction, logistics, retail, and hospitality, generate massive amounts of data in the field. Yet, poor or intermittent connectivity often prevents them from leveraging cloud-only AI solutions.

Empowering these workers with edge-based AI can improve productivity, staff satisfaction, and decision-making. With modern mobile devices and rugged tablets now powerful enough to run local AI models, organizations can bridge the gap between the cloud and the edge.

Overcoming Barriers

There are a number of challenges, but I believe the two most significant are:

- Connectivity: Edge locations often suffer from zero or intermittent connectivity, making cloud-dependent AI unreliable.

- Infrastructure: Legacy systems and limited compute power at the edge hinder real-time AI adoption.

I recommend a hybrid approach: process what’s possible locally, while syncing raw data and AI outputs to the cloud for deeper analysis and training.
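As a rough illustration of this hybrid pattern, the sketch below runs inference on the device, queues each result in an outbox, and flushes the outbox only when a connection is available. Everything here is hypothetical: `run_local_model`, `HybridPipeline`, and the `upload` callback are placeholder names, and the "model" is a trivial threshold check standing in for a real on-device model.

```python
from collections import deque
from typing import Callable


def run_local_model(reading: float) -> str:
    """Placeholder for an on-device model: flags readings above a threshold."""
    return "alert" if reading > 0.8 else "ok"


class HybridPipeline:
    """Process locally first; sync raw data and outputs when online."""

    def __init__(self, upload: Callable[[dict], None]) -> None:
        self.upload = upload  # hypothetical cloud upload function
        self.outbox: deque = deque()  # records waiting for connectivity

    def process(self, reading: float) -> str:
        # Inference happens on the device, so it works with no connection.
        label = run_local_model(reading)
        # Keep both the raw input and the AI output for later cloud analysis.
        self.outbox.append({"raw": reading, "label": label})
        return label

    def sync(self, online: bool) -> int:
        """Upload queued records if connected; return how many were sent."""
        sent = 0
        while online and self.outbox:
            self.upload(self.outbox[0])
            self.outbox.popleft()  # remove only after the upload call returns
            sent += 1
        return sent


cloud: list = []  # stands in for cloud storage in this sketch
pipeline = HybridPipeline(upload=cloud.append)
labels = [pipeline.process(r) for r in (0.2, 0.95)]
sent_offline = pipeline.sync(online=False)  # nothing is sent while offline
sent_online = pipeline.sync(online=True)    # queued records are flushed
```

The worker gets a label immediately in both cases. Connectivity only affects when the cloud copy arrives, not whether the local decision can be made.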

Real-World Use Cases

The opportunities and challenges are present across many industries:

- Construction: Workers capture site data that AI processes to assist engineers in making quick, regulation-compliant decisions.

- Retail: Edge AI enhances self-checkout systems, reducing fraud while improving customer experience.

- Supply Chain: Real-time AI-driven inventory insights improve forecasting and reduce costs.

- Healthcare: Faster, more accurate AI-powered diagnostics are already driving adoption in traditionally cautious industries.

Live Questions from the Webinar

I kept the session to just 30 minutes, so I didn’t have time to answer every question that came in from the audience. Here are all the questions that were asked, along with my answers:

Question #1:

What AI models have you explored using on edge devices (mobile or IoT compute platforms), and is there a technology implementation pathway that has worked so far?

Answer:

I have primarily tested computer vision models, but I have also run LLMs with very small parameter counts. This has been done on hardware ranging from the Samsung QRB5 mobile processor to the Raspberry Pi 5 (RPi5). Technology-wise, PyTorch is the best way to go, both for starting out and at scale, because of the strong open source support behind the project. Where Ditto fits in the training loop is up to the user to decide.

For data engineering and data science, gathering data and storing it in Ditto works well because the document model makes it extremely simple to tie “metadata” back to an attachment. This is really powerful: imagine keeping a live, updatable document of all the data associated with an image, and then needing only subscriptions to get what you need. The other side of that is what we generally call “contact reports”: as data comes in and inference is run, you can collect that data for your application’s purposes and/or for labeling and retraining later.

Generally the stack would be:

- Raw Data

- Ditto

- Model

- Ditto

- PyTorch (inference)

- Inference Data

- Ditto

- User Facing Application
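The flow above can be sketched in a few lines. This is a minimal illustration and not the Ditto SDK: `LocalStore` is a hypothetical in-memory stand-in for a syncing document store, `classify` is a stub where a PyTorch model's forward pass would go, and the document fields (`site`, `size_bytes`, `inference`) are made up for the example.

```python
from typing import Callable, Dict, List


class LocalStore:
    """Hypothetical in-memory stand-in for a local, syncing document store."""

    def __init__(self) -> None:
        self.docs: Dict[str, dict] = {}
        self.subscribers: List[Callable[[dict], None]] = []

    def subscribe(self, callback: Callable[[dict], None]) -> None:
        self.subscribers.append(callback)

    def upsert(self, doc_id: str, fields: dict) -> dict:
        # Merge new fields into the existing document, then notify listeners.
        doc = self.docs.setdefault(doc_id, {"_id": doc_id})
        doc.update(fields)
        for callback in self.subscribers:
            callback(dict(doc))
        return doc


def classify(image_bytes: bytes) -> dict:
    """Stub for model inference; a real version would call a PyTorch model."""
    label = "large" if len(image_bytes) > 4 else "small"
    return {"label": label, "confidence": 1.0}  # placeholder confidence


store = LocalStore()
seen_by_app: List[dict] = []
# The user-facing application only reacts to documents that carry a result.
store.subscribe(lambda d: seen_by_app.append(d) if "inference" in d else None)

# 1. Raw data lands in the store with its metadata attached.
raw = b"\x00\x01\x02\x03\x04\x05"
store.upsert("img-1", {"site": "dock-4", "size_bytes": len(raw)})

# 2. Inference runs locally and the result is written back to the same
#    document, so metadata and output stay linked for later labeling.
store.upsert("img-1", {"inference": classify(raw)})
```

Writing the inference result back onto the same document is the design choice that matters here: the application, and any later retraining job, can read the input metadata and the model output from one place.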

Question #2:

OK, Local APIs first. How about MCP options residing on an IoT Device?

Answer:

This could definitely be done. Honestly something in the edge server product would be very interesting here.

Question #3:

Do you have a demo that shows your solution in action in a realistic scenario?

Answer:

It all depends on what you want to see. Let me show you something custom! Fill out this form and I’ll show you a demo later this week.

Question #4:

Latent AI and Ditto? What would that partnership bring to developers using Ditto?

Answer:

Read all about our partnership with Latent AI here.

Question #5:

For data security and privacy, wouldn’t it be a positive step to pull in a model from Ollama or Hugging Face and run it locally? The API portion, even while encrypted, still transmits *something* to the API provider. This can be frowned upon for some regulatory use cases.

Answer:

This is already being done within Ditto. It is always local first, and Ollama can be set up in an agentic way, so we can give it live access to generate queries for the local DB.
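To make the idea concrete, here is a minimal sketch of asking a locally running Ollama model to draft a query, using Ollama's `/api/generate` endpoint on its default port. How Ditto actually wires this up is not covered here, so treat the rest as assumptions: the model name `llama3.2`, the SQL-like query syntax, the prompt wording, and the helper names `build_payload`, `is_read_only`, and `generate_query` are all illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_payload(question: str, collection: str, fields: list) -> dict:
    """Build a non-streaming generate request asking for a single query."""
    prompt = (
        f"Write one read-only SELECT query against the collection '{collection}' "
        f"with fields {', '.join(fields)} that answers: {question}\n"
        "Return only the query."
    )
    # The model name is an assumption; use whichever model is pulled locally.
    return {"model": "llama3.2", "prompt": prompt, "stream": False}


def is_read_only(query: str) -> bool:
    """Accept only a single SELECT statement before touching the local DB."""
    cleaned = query.strip().rstrip(";")
    return cleaned.upper().startswith("SELECT ") and ";" not in cleaned


def generate_query(question: str, collection: str, fields: list) -> str:
    """Ask the local model for a query; the request only goes to localhost."""
    body = json.dumps(build_payload(question, collection, fields)).encode()
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        query = json.loads(response.read())["response"]
    if not is_read_only(query):
        raise ValueError("model returned something other than a SELECT query")
    return query.strip()
```

With Ollama running and a model pulled, `generate_query("Which pallets are damaged?", "pallets", ["id", "status"])` would return the model's suggested query. The `is_read_only` check is a deliberately conservative guard so that model output is never executed against the local database unvalidated.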

Looking Ahead

The rise of powerful edge devices and vendor-provided APIs is accelerating adoption. But success depends on collaboration: AI insights must be shared across teams to avoid creating new “AI silos.”

AI at the edge isn’t just about technology; it’s about equipping workers in the toughest environments with the same intelligent tools available in the office. By adopting hybrid architectures and rethinking AI strategies, businesses can ensure that critical workers are not left behind.

Want to learn more? Watch the full webinar recording. Still have questions? Request a demo today.